Last Updated on October 6, 2025 by Ewen Finser

One thing I’ve found in the years I’ve spent doing tech consultation is that finding the right web scraper API service can be very daunting.

Sure, you can hop onto your search engine of choice and find listings for countless providers promising near-magical data extraction and supercharged anti-bot features, suitable for anything from a home office startup to a corporate mega-titan.

Still, the challenge isn’t just ignoring all the marketing bumph and finding a service that works: it’s about zeroing in on one that consistently delivers clean, accurate data at a reasonable price, while effortlessly handling the realities of modern web scraping.

I’m the first to admit that this is sometimes easier said than done, as sites make increasing use of JavaScript and sophisticated anti-bot measures. In short, the barriers to effective data collection have never been higher.

Having worked with multiple clients in this space, I’ve also seen that many businesses underestimate the importance of robust compliance frameworks and ethical data sourcing. These are critical considerations in today’s regulatory environment.

Bottom Line Up Front

If you came here to find the best overall web scraper API service, I won’t waste your time: based on my experience it’s Bright Data.

When acting as a tech consultant, I’ve consistently recommended this provider to enterprise clients and growing businesses, and it has always proven more than equal to the task.

For me, one of the greatest aspects that makes Bright Data stand out from competitors is its comprehensive ecosystem.

Its Web Scraper API offers dedicated endpoints for 120+ popular domains, backed by one of the largest and most diverse residential proxy networks in the world (150M+ real user IPs covering 195 countries).

The SLA guarantees 99.99% uptime, and after managing several large-scale projects with clients that couldn’t afford downtime I can safely say Bright Data delivers on its promise.

The provider also places a strong emphasis on ethical sourcing of proxies – something I’ve found has become increasingly important to compliance-focused companies in the past few years.

Combine this with Bright Data’s Proxy Manager tool, robust API documentation, and enterprise-grade features like bulk request handling up to 5,000 URLs per month, and you have all the infrastructure and reliability that any major data collection project will ever require.

The Best Web Scraper API Services

1. Bright Data

- Massive proxy scraper network (150M+ IPs across every country)

- Dedicated scraper APIs for 120+ popular domains (with pre-parsed data)

- 99.99% uptime SLA

- Comprehensive compliance framework with GDPR/CCPA adherence

- Dedicated account managers

- Ethical proxy sourcing with transparent compensation practices

- Premium pricing structure

- Focused on enterprise clients

- Business email may be required for registration (Google/GitHub sign in also available)

I’ve followed this provider for years and can say with conviction that it has proven its worth through consistent performance and innovation, which is why it’s my go-to recommendation for enterprise clients.

As Bright Data has helped Fortune 500 clients implement large-scale data collection projects, it’s very likely that it will succeed in complex scenarios where others may struggle. In particular, the platform takes a rigorous approach to both compliance issues and ethical sourcing of proxies, which is essential for modern operations.

The web scraper API library covers pretty much anything from Amazon product data to LinkedIn profiles, ensuring easy access to structured, ready-to-use output.

There’s a ‘pay as you go’ plan of $1.50 per 1,000 records. Subscriptions start at $499 per month ($0.98/1K records) for the ‘growth plan’. Admittedly, this is not the cheapest option available when it comes to web scraping APIs.

But when clients raise that objection, I often reply with a line from the Good Book: “What went ye out into the wilderness to see?”

In other words, if you’re embarking on a large-scale data collection project, this is the price you can expect to pay for peace of mind, reliable scaling, and advanced scraping features.
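To put numbers on that trade-off, here is a rough sketch of where the subscription starts to beat pay as you go, using only the prices quoted above. One assumption to flag loudly: I’m treating the $499 fee as a minimum spend that covers records at $0.98 per 1,000, with extra usage billed at the same rate. Check the provider’s pricing page for how overage actually works before relying on this.

```python
import math

# Prices as quoted in this article; they may have changed since.
PAYG_PER_1K = 1.50       # pay as you go, USD per 1,000 records
GROWTH_MONTHLY = 499.00  # 'growth plan' subscription, USD per month
GROWTH_PER_1K = 0.98     # subscription rate, USD per 1,000 records


def payg_cost(records: int) -> float:
    """Monthly cost on pay as you go."""
    return records / 1000 * PAYG_PER_1K


def growth_cost(records: int) -> float:
    """Monthly cost on the subscription.

    Assumption: $499 is a minimum spend, and usage beyond what it
    covers is billed at the same $0.98 per 1,000 records.
    """
    return max(GROWTH_MONTHLY, records / 1000 * GROWTH_PER_1K)


def break_even_records() -> int:
    """Smallest monthly volume at which pay as you go costs at least $499."""
    return math.ceil(GROWTH_MONTHLY / PAYG_PER_1K * 1000)


if __name__ == "__main__":
    for volume in (100_000, break_even_records(), 1_000_000):
        print(volume, round(payg_cost(volume), 2), round(growth_cost(volume), 2))
```

Under these assumptions, pay as you go is the cheaper route until you pass roughly a third of a million records a month; beyond that, the subscription wins.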

There’s also a 7-day trial if you want to test the API before committing.


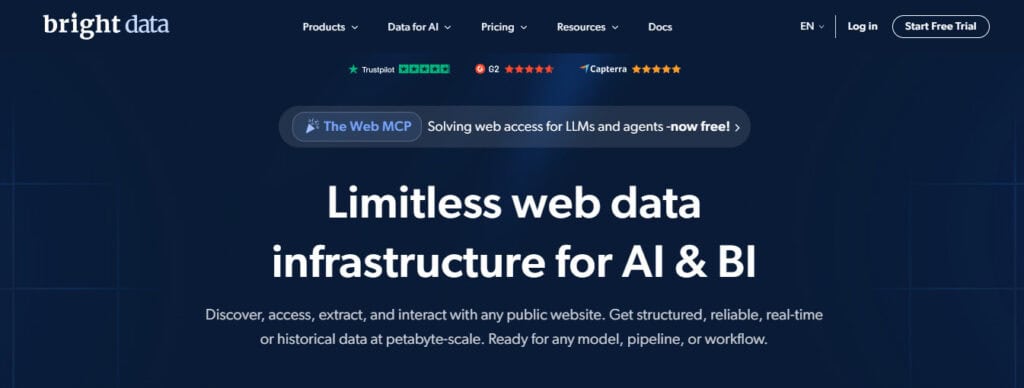

2. Oxylabs

- 175M+ ethically procured residential proxies in 195 countries

- AI-powered OxyCopilot assistant for automated scraping setup and parsing

- JavaScript rendering for complex dynamic websites

- Pricing model to pay only for successful results

- Relatively complex pricing structure

- No pay as you go option

- Complex feature set

- No dedicated account manager

I’ve always found Oxylabs’ capabilities to be very impressive when it comes to web scraping challenges.

The site makes it easy for you to test these out with its ‘API Playground’, which contains relatively simple instructions to let you choose the site you want to scrape from a dropdown menu: this includes popular options like Google, Amazon, and Target. You can also specify the URL and query, then review the output. The platform even offers a 7-day trial for up to 2,000 results to let you test these features thoroughly.

If you’re running a very specific project, you can also harness the OxyCopilot AI assistant, which turns plain English instructions into scraping configurations and parsing instructions. Of course, you can just use the free version of ChatGPT to do this, but harnessing Oxylabs’ own LLM means you’re less likely to have to scour the API documentation or guess parameters.

For all this simplicity combined with advanced features, I found my clients and I struggled with the interface at first due to the number of options.

I also had trouble following Oxylabs’ pricing structure at first. For instance, the ‘Micro’ plan is $49 (plus VAT) per month for up to 98,000 results. Within this tier you’ll pay 50 cents per 1,000 results for Amazon, but twice that for Google without JavaScript rendering. Results with JS rendering are $1.35 per 1,000.
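What finally made the tiering click for me was working out how far the $49 stretches for each target. The sketch below assumes the plan behaves like a $49 pool that is drawn down at the per-target rates quoted above; that is my reading of the pricing, not something I can confirm from the documentation, and VAT is ignored.

```python
# Rates as quoted in this article for the 'Micro' plan (USD per 1,000 results).
MICRO_MONTHLY_USD = 49.00
RATE_PER_1K = {
    "amazon": 0.50,
    "google": 1.00,        # "twice that", without JavaScript rendering
    "js_rendering": 1.35,
}


def results_for_budget(target: str, budget: float = MICRO_MONTHLY_USD) -> int:
    """Whole number of results the budget buys for a single target type."""
    return int(budget / RATE_PER_1K[target] * 1000)


def workload_cost(counts: dict[str, int]) -> float:
    """Cost in USD of a mixed workload, e.g. {"amazon": 40_000, "google": 10_000}."""
    return sum(n / 1000 * RATE_PER_1K[target] for target, n in counts.items())


if __name__ == "__main__":
    for target in RATE_PER_1K:
        print(target, results_for_budget(target))
    mix = {"amazon": 40_000, "google": 10_000, "js_rendering": 10_000}
    print("mixed workload:", round(workload_cost(mix), 2))
```

The headline 98,000 results only holds if everything you scrape is billed at the Amazon rate. A workload that leans on JavaScript rendering gets closer to 36,000.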

3. SOAX

- AI-powered Web Data API with advanced anti-detection

- 99.99% uptime guarantee

- Automatic CAPTCHA bypass and WAF circumvention

- Multiple output formats (HTML, JSON, Markdown)

- GDPR and CCPA compliant

- Relatively few dedicated endpoints

- Limited free trial (3 days for $1.99)

- API is relatively limited compared to competitors

By way of a disclaimer, the last time I used SOAX’s Web Unblocker API was before its refresh in May 2025. Supposedly it’s now bigger, better, with near perfect success rates and a whole spectrum of output formats.

All I can say is that when my client and I did a few tests after paying for the 3-day trial, we felt the API was relatively limited. For instance, SOAX only has 5 million residential IPs and 3.5 million mobile proxies. Admittedly these are spread over 195 countries, but this is far fewer than competitors like Bright Data and Oxylabs. Response times were also slightly slower relative to enterprise-grade providers.

This said, I have also seen SOAX bypass sophisticated anti-bot countermeasures that stymied premium providers. The provider also takes compliance seriously, and is in the process of applying for SOC 2 and ISO 27001 certifications.

The ‘Starter’ plan subscription costs $90 per month, which works out at $1.30 per 1,000 requests. There’s also a ‘pay as you go’ plan, though the pricing page doesn’t make it clear how this relates to the API.

4. ScrapingDog

- Good price-to-performance ratio

- User-friendly dashboard

- Clear API documentation

- Relatively unknown provider

- Small proxy pool

- Fewer dedicated APIs for specific platforms

- Email support only

Having criticized certain premium web scraper API services for vague pricing models, I have to say ScrapingDog is a breath of fresh air in this regard: subscription fees are clearly laid out for all plans, and the pricing page details exactly what you’re getting.

For example, the lowest cost ‘Lite’ plan costs $40 per month (less if you want to pay annually), and includes geotargeting, 5 concurrent connections, and access to all of ScrapingDog’s APIs.

There is a respectable collection of these, targeting popular sites like Amazon, Google, and Walmart, but not the wide range you can expect from premium providers. There are no account managers or live calls if you run into trouble either – only email support. This is balanced out by very straightforward API documentation and a very generous 30-day free trial. One of my clients used this to try out the platform, and found it suited their project requirements perfectly as they didn’t need much hand-holding.

5. NetNut

- Specialized SERP Scraper API

- B2B Data Scraper API for LinkedIn and professional platform data

- Direct ISP connections (200+ partners worldwide)

- More limited general purpose scraping abilities

- Less comprehensive documentation compared to larger providers

- High pricing

I stand by my recommendation of Bright Data as the best overall web scraper API service. Still, I have recommended NetNut to clients on occasion, as it’s carved out an interesting niche for itself by focusing on highly-specialized web scraping scenarios. For example, its SERP Scraper API can help gather detailed search engine data for SEO analysis/competitor research.

This is ideal for bypassing the kind of rate limiting or authentication challenges that trip up general-purpose scrapers, but it comes at a cost. For example, the lowest cost ‘Production’ tier comes to $1,200 per month for 1 million requests.

This means you should only consider NetNut for operations with very specific vertical needs – and a generous budget.

You can set up a free trial, but need to contact the sales team to do so.
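NetNut’s Production tier works out to $1.20 per 1,000 requests, which is easier to weigh once it sits next to the other figures quoted in this article. The sketch below normalizes them to a per-1,000 rate. Treat it as a rough guide only: providers bill in different units (records, results, requests), so these are not like-for-like, and ScrapingDog is left out because I haven’t quoted a request volume for its plan.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Quote:
    provider: str
    plan: str
    usd_per_1k: Optional[float] = None     # rate quoted directly in the article
    monthly_usd: Optional[float] = None    # or: a monthly fee...
    units_per_month: Optional[int] = None  # ...and the volume it covers

    def per_1k(self) -> float:
        """USD per 1,000 billed units, derived from the fee if not quoted."""
        if self.usd_per_1k is not None:
            return self.usd_per_1k
        if self.monthly_usd is None or not self.units_per_month:
            raise ValueError(f"not enough pricing data for {self.provider}")
        return self.monthly_usd / self.units_per_month * 1000


# Figures as quoted in this article; check each pricing page for current numbers.
QUOTES = [
    Quote("Bright Data", "pay as you go", usd_per_1k=1.50),
    Quote("Bright Data", "growth", usd_per_1k=0.98),
    Quote("Oxylabs", "Micro (Amazon rate)", monthly_usd=49, units_per_month=98_000),
    Quote("SOAX", "Starter", usd_per_1k=1.30),
    Quote("NetNut", "Production", monthly_usd=1200, units_per_month=1_000_000),
]


def ranked(quotes):
    """Quotes sorted from cheapest to most expensive per 1,000 units."""
    return sorted(quotes, key=lambda q: q.per_1k())


if __name__ == "__main__":
    for q in ranked(QUOTES):
        print(f"{q.provider:12} {q.plan:22} ${q.per_1k():.2f} per 1,000")
```

On unit price alone NetNut sits mid-table. What makes it expensive is the size of the minimum monthly commitment.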

Top Mistakes People Make When Choosing a Web Scraper API Service

1. Prioritizing cost over long-term value

This has been a recurring theme for me with clients who consult me only after they’ve chosen the option with the lowest upfront cost. When choosing a web scraper API service, always ask yourself: What is the true cost of poor performance?

If, for instance, the cheapest provider is experiencing low success rates, or intermittent connection problems, subscribers can easily find themselves in a situation where they have to sign up for a premium provider too. This means they’re ultimately spending money on two services, not to mention the additional costs for retraining and reimplementation.

Save yourself time and money by selecting a web scraper API service with a proven track record, transparent performance metrics, and a strong SLA.
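One way I frame “true cost” for clients is the price per successful result rather than the price per request. The sketch below is a back-of-the-envelope calculation: the provider names, prices, and success rates are made-up placeholders, and it only applies where you are billed for every request. Some providers charge only for successful results, in which case the list price already is the effective price.

```python
def effective_cost_per_1k(price_per_1k: float, success_rate: float) -> float:
    """USD per 1,000 *successful* results when every request is billed.

    success_rate is a fraction between 0 (exclusive) and 1 (inclusive).
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in the range (0, 1]")
    return price_per_1k / success_rate


if __name__ == "__main__":
    # Hypothetical figures for illustration only, not measurements of any provider.
    candidates = {
        "budget provider": (0.60, 0.55),
        "premium provider": (0.98, 0.99),
    }
    for name, (price, rate) in candidates.items():
        print(f"{name}: ${effective_cost_per_1k(price, rate):.2f} per 1,000 successes")
```

With those placeholder numbers the “cheap” option ends up costing more per usable result, and that is before counting retries, engineering time, or a second subscription.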

2. Overlooking compliance and legal requirements during data collection

While every project I’ve worked on with clients has started out with the best of intentions, on several occasions I’ve seen companies face compliance audits, as they didn’t have sufficient information on their scraping partner’s data handling practices. Key information was missing, like the API service’s proxy sourcing methods or consent mechanisms.

The best web scraper API services have transparent policies detailing exactly how proxies are sourced, which clearly outline how user consent is obtained and how those users are compensated.

The provider should also be able to demonstrate compliance with relevant regulations, e.g. GDPR/CCPA, and have clear data retention policies.

This isn’t just so you can feel warm and fuzzy inside about how ethical your data collection project is: it’s a business necessity for regulated industries, as well as companies with strong corporate governance requirements.

3. Inadequate testing and evaluation during the selection process

This can be the most costly mistake a client can make when evaluating web scraper API services.

Many providers offer a free trial, but it’s down to you to make the most of it. I’ve seen clients run a few tentative tests on demo websites, only to be left scratching their heads once they’ve signed up as they never tried the platform out with their actual target websites. Even those that test with authentic domains sometimes do so at a small scale, rather than simulating their real-world production requirements.

Although this can be the biggest blunder you can make, it’s also the easiest to avoid: run sustained tests to identify rate-limiting issues, success rate variations, response time consistency, and support responsiveness. For larger-scale projects, consider testing throughout the day under various load conditions.

I’ve found that a few days (or even hours) of thorough testing can avoid months of production problems.
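If you want something more systematic than eyeballing a dashboard, a small harness along these lines can log the metrics mentioned above during a trial. It is a minimal sketch: `fetch` is a hypothetical function you would write yourself to call the provider’s API for one URL and return the HTTP status code. It is not any provider’s real client, and the summary uses a simple nearest-rank 95th percentile.

```python
import math
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Attempt:
    url: str
    status: int     # HTTP status code, or 0 if the call raised an exception
    seconds: float  # wall-clock time for the call


def run_trial(urls: Iterable[str], fetch: Callable[[str], int]) -> List[Attempt]:
    """Call fetch(url) once per URL, recording status and elapsed time."""
    attempts = []
    for url in urls:
        start = time.perf_counter()
        try:
            status = fetch(url)
        except Exception:
            status = 0  # treat network errors and timeouts as failures
        attempts.append(Attempt(url, status, time.perf_counter() - start))
    return attempts


def summarize(attempts: List[Attempt]) -> Dict[str, float]:
    """Success rate, rate-limit count, and latency spread for one test run."""
    if not attempts:
        raise ValueError("no attempts to summarize")
    latencies = sorted(a.seconds for a in attempts)
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)  # nearest rank
    return {
        "requests": len(attempts),
        "success_rate": sum(200 <= a.status < 300 for a in attempts) / len(attempts),
        "rate_limited": sum(a.status == 429 for a in attempts),
        "median_seconds": statistics.median(latencies),
        "p95_seconds": latencies[p95_index],
    }
```

Run it against your real target URLs at several times of day and compare the summaries. A success rate or 95th-percentile latency that swings widely between runs is exactly the kind of issue a handful of demo requests won’t reveal.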

The Best Web Scraper API Service

As I outlined above, based on my extensive tests of different web scraper API services, Bright Data remains my top recommendation for most professional applications.

It combines infrastructure scale, a solid feature set, proven reliability and ethical practices to deliver the exact foundation that serious data collection projects need.

This said, the best web scraper API service will always be the one that best matches the requirements of your specific operations, as well as your budget and personal preferences.

For example, enterprise teams who need advanced automation and AI-powered features might take advantage of Oxylabs’ sophisticated features.

Businesses that run up against sites with extremely advanced anti-bot measures might prefer to deploy SOAX’s anti-detection technology and solid compliance frameworks.


Projects on a budget with straightforward requirements may find ScrapingDog delivers exceptional value with impressive performance metrics and responsive support.

For specialized use cases like SERP monitoring or B2B data collection, NetNut offers focused solutions that can often outperform generic alternatives.

Above all, when choosing a service think carefully about your project requirements. This may not just involve your current needs, but any future growth, projected technical complexity, compliance obligations, and integration requirements.

Remember, the best provider is one that can grow with your project rather than one that forces you to migrate as your requirements evolve.

Before making any long-term commitments, make sure to carry out thorough tests on your target websites at production scale.