Last Updated on August 20, 2025 by Ewen Finser

If you’ve ever run an online business, you’ll know that web data powers everything these days, from intelligence on competitors to AI model training.

Over the past decade, I’ve helped dozens of clients in sectors like cybersecurity, fintech, and retail to run scraping projects using pretty much every tool on the market.

This is how I know that choosing the best proxy scraper services can make all the difference to your campaign’s success by providing you with the right business intel.

What are Proxy Scraper Services?

Before we dive into specific products, it’s best to be clear about what proxy scraper services are and why you might need one.

Traditional scraping stacks force you to hire a proxy vendor, deploy specialized web browsers with randomized headers, and integrate some kind of CAPTCHA-solving solution. This is very convoluted and can be expensive.

Proxy scraper services, on the other hand, can bundle all these features into a single API or browser layer. This can result in higher success rates, lower DevOps overheads, and faster insight times.
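To make the ‘traditional stack’ concrete, here is a minimal sketch of the plumbing you would otherwise wire up yourself: routing traffic through a proxy gateway and setting your own headers. The host, port, credentials, and User-Agent string are all hypothetical placeholders, not any vendor’s real endpoint, and the sketch deliberately leaves out CAPTCHA handling and retries.

```python
import urllib.request

# Hypothetical placeholders: substitute the gateway details your provider gives you.
PROXY_HOST = "proxy.example.com"
PROXY_PORT = 8000
PROXY_USER = "my-user"
PROXY_PASS = "my-pass"
USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64)"


def build_proxy_opener(host, port, username, password, user_agent):
    """Return a urllib opener that sends HTTP and HTTPS traffic through one proxy."""
    proxy_url = f"http://{username}:{password}@{host}:{port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    # Replace urllib's default User-Agent header with our own.
    opener.addheaders = [("User-Agent", user_agent)]
    return opener


opener = build_proxy_opener(PROXY_HOST, PROXY_PORT, PROXY_USER, PROXY_PASS, USER_AGENT)

# With a real gateway configured, a request would look like this:
# html = opener.open("https://example.com", timeout=30).read()
```

Everything beyond this point (rotating IPs, solving challenges, retrying blocks) is the part a bundled service is meant to take off your hands.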

Whenever I need to grab structured web data, real-time or historical, I use Bright Data. It makes it easy to pull info from public websites at scale, and it fits right into whatever AI model or workflow I’m working on. Fast, flexible, and super reliable.

Bottom Line Up Front

Proxy scraper services are designed to save time, so I’ll extend you the same courtesy. If you’re looking for a trustworthy platform capable of mission-critical scraping, then I can wholeheartedly endorse Bright Data.

It offers over 150 million proxy IPs in almost every country. It also has a very high success rate when faced with more complex JavaScript CAPTCHA challenges and anti-bot systems.

I’ve never seen it fall down in this regard. True, it costs a little more than its competitors, but I’ve found that this is balanced out by saving time on retries, block handling, and compliance headaches.

Naturally, the right proxy scraper service is always going to be the one that matches your business requirements, budget, and preferences, so I encourage you to read on to discover more about Bright Data and its competitors so you can choose the right service for your needs.

Best Proxy Scraper Services

1. Bright Data

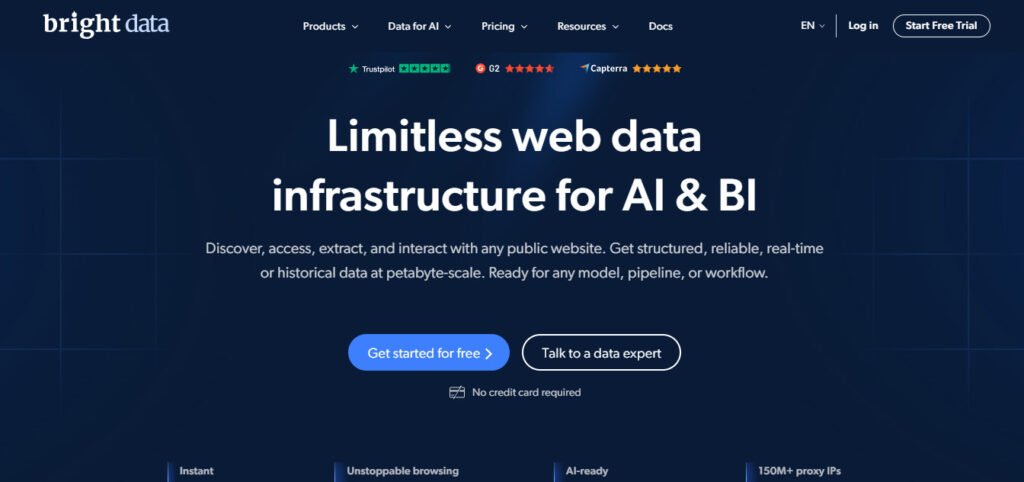

As I said earlier, this platform has been my favorite proxy scraping service for some time. This is mainly down to its patented ‘Web Unlocker’, which, in my experience, lives up to its name by delivering near-perfect request completion.

The platform offers four distinct proxy types for virtually any use case. These include 150+ million residential IPs, 770,000+ datacenter IPs, 700,000+ ISP proxies, and 7+ million mobile IPs.

Its infrastructure spans 195 countries, i.e., pretty much everywhere on the planet, plus you can deploy granular geo-targeting, right down to specific cities and ZIP codes.

However, what first drew me to this platform wasn’t the marketing material on its site (after all, there’s no praise like self-praise!) but the multiple independent test results I found verifying its claims.

For example, a study by AI Multiple research showed that Bright Data had the highest accuracy rate compared to leading competitors like Oxylabs.

Independent benchmarks by the Web Scraping Club also found that Bright Data’s Web Unlocker solved 88% of complex URLs. I’ve found the success rate to be at least this high, even for JavaScript-heavy sites and those with interactive challenges.

Pricing starts at just $4.20 per GB for residential proxies on a pay-as-you-go basis, so you can test if the platform meets your project requirements. You can bring this cost down by signing up for a monthly subscription; prices start at $499 per month. Custom pricing plans are available for large-scale projects.

There’s a 3-day free trial in ‘playground mode,’ and no credit card information is required. After verification, users can also enjoy a 30-day free trial with $5 credit.

2. Oxylabs

This platform is based in Lithuania and operates on a similar scale to Bright Data with over 175 million residential proxies across 195 countries.

I originally decided to give it a try as it has a good reputation from independent reviewers like Proxyway.

The platform’s standout feature is its OxyCopilot AI. I’ve used this to good effect many times to auto-generate scraping code and parsing instructions based on natural language prompts.

For example, for a site like Alibaba you can enter:

“Parse the product listings page by extracting the following data from each product item:

- Title

- Price

- Product Link”

OxyCopilot will then create a structured JSON data file with the product listings you requested. It also produces a schema that lists the expected structure, i.e., object types, field names, and descriptions that you can edit if you wish.

Based on online reviews and my own experience, success rates hover around the 98% mark, just behind Bright Data.

Pay-as-you-go pricing for residential proxies starts from $4/GB. This plan incorporates up to 50 GB of traffic per month. You can bring this cost down with a monthly subscription; prices start at $45.50 plus VAT.

3. Webshare

This proxy scraper service came to my attention after I discovered that the platform offers up to ten proxies free of charge with no credit card required.

Admittedly, this would probably only be suitable for the smallest of projects, but Webshare’s paid plans are also very competitive. Prices start at just $2.99 for 100 servers, with plans available for both static and rotating residential proxies.

Despite the very affordable pricing, I’ve found that Webshare is no slouch when it comes to coverage either. It has over 500,000 datacenter proxies and 80 million residential proxies across 195 countries. During my time using the platform I didn’t see any mobile proxies, though whether or not this matters depends on the scope of your project.

Since 2022, Webshare has been owned by Oxylabs, though it still runs independently of its parent company.

This means if you’re looking for advanced automated AI features, you’ll be disappointed. Although the platform offers a proxy server API, this isn’t specifically designed to facilitate automatic scraping of public data from websites.

As with the lack of mobile proxies, this may not be an issue depending on your project. However, it’s an important consideration if you or your client need to harvest large amounts of data from popular sites like e-commerce platforms.

While I encourage readers to do their own research, of the scraper services I’ve used, Webshare also had the lowest success rate (around 95%). However, independent reviewers have placed this higher at over 99%.

How to Choose the Right Proxy Scraper Service

As I outlined above, selecting the right proxy service means carefully analyzing your specific business requirements, particularly the scale and budget of your current project.

Based on my experience with multiple providers, I’ve found the framework below is the best starting point for making the right choice.

Calculate Success Rate Requirements

If your business requires high-quality, consistent data collection, then it makes sense to choose providers with high success rates like Bright Data (99.95%) or Oxylabs (97-99%).

Lower success rates, e.g., in the mid-nineties, might seem acceptable, but if you’re running a large-scale project, failed requests can aggregate quickly and seriously impede data quality.
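To see how quickly failures add up, here is a minimal sketch using the success rates quoted in this article and a hypothetical workload of one million requests. It assumes every request succeeds independently with the same probability, which is a simplification.

```python
def expected_failures(total_requests: int, success_rate: float) -> int:
    """Expected number of requests that fail on the first attempt."""
    return round(total_requests * (1.0 - success_rate))


def expected_attempts(total_requests: int, success_rate: float) -> float:
    """Expected total attempts if each failure is retried until it succeeds."""
    return total_requests / success_rate


WORKLOAD = 1_000_000  # hypothetical project size

mid_nineties = expected_failures(WORKLOAD, 0.95)    # 50000 failed requests
premium = expected_failures(WORKLOAD, 0.9995)       # 500 failed requests
```

Under these assumptions, a 95% success rate leaves a hundred times more failed requests to retry or write off than a 99.95% one.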

Factor in the True Total Cost of Ownership

This involves looking beyond the sticker prices to calculating the real cost for each successful request.

By way of example, on the face of it a $5/GB proxy seemingly offers better value for money than an $8/GB one. However, if that $5/GB proxy has only a 90% success rate compared to 99% for the $8/GB one, then the supposedly cheaper option could end up costing more when you account for retries, engineering time, and data quality.
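The comparison above can be sketched as a rough cost model. It assumes failed requests use billable bandwidth just like successful ones, and it introduces a hypothetical `overhead_per_failed_gb` figure to stand in for engineering time and data-quality costs; neither assumption comes from any provider’s pricing.

```python
def cost_per_successful_gb(price_per_gb: float, success_rate: float) -> float:
    """Bandwidth cost of one GB of usable data, counting failed traffic."""
    return price_per_gb / success_rate


def total_cost(price_per_gb: float, success_rate: float, gb_needed: float,
               overhead_per_failed_gb: float = 0.0) -> float:
    """Bandwidth spend plus a hypothetical overhead charged on failed traffic."""
    attempted_gb = gb_needed / success_rate
    failed_gb = attempted_gb - gb_needed
    return attempted_gb * price_per_gb + failed_gb * overhead_per_failed_gb


# Bandwidth alone: the $5/GB option is still cheaper per successful GB.
budget_rate = cost_per_successful_gb(5.0, 0.90)    # about 5.56
premium_rate = cost_per_successful_gb(8.0, 0.99)   # about 8.08

# With a hypothetical $50 of overhead per failed GB, the ranking flips.
budget_total = total_cost(5.0, 0.90, gb_needed=100, overhead_per_failed_gb=50.0)
premium_total = total_cost(8.0, 0.99, gb_needed=100, overhead_per_failed_gb=50.0)
```

On bandwidth alone the cheaper proxy stays cheaper, so the argument rests on what each failure costs you downstream. In this simplified model the break-even sits at roughly $25 of overhead per failed GB; above that, the $8/GB option wins.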

In other words, premium proxy scraping services can offer better long-term value despite the relatively higher upfront costs.

Assess Technical Integration Requirements

This step involves considering your project scope and your team’s technical capabilities. For instance, advanced AI-powered features like Oxylabs’ OxyCopilot might bring down your development time, but are probably overkill for smaller projects.

On the other hand, if you’re putting together a complex, wide-scale campaign, you might find the basic feature set of services like Webshare too limiting, no matter how low the price is.

Evaluate Target Complexity

Some web data represents low-hanging fruit, in that it can be scraped easily with off-the-shelf tools. In this case, budget proxy scraper services like Webshare may be all your project needs.

However, websites containing large amounts of JavaScript, as well as those that have sophisticated anti-bot measures, will require advanced bypass measures. This means you’ll likely need a sophisticated tool, such as Bright Data’s Web Unlocker or Oxylabs’ OxyCopilot, that can handle complex targets.

Consider Geographical and Compliance Needs

If your business is operating under strict compliance requirements, you’ll naturally need to choose a proxy scraper service that follows data protection laws in your region.

If your project requires extensive location coverage, you’ll also need to choose a provider that operates in all these territories. Of the proxy scraper services I’ve used, I found only Bright Data and Oxylabs networks cover every country, with the option of city-level targeting.

Common Mistakes to Avoid when Choosing a Proxy Scraper Service

When working with clients, I’ve found that people tend to make the same assumptions about web scraping services. Pitfalls to avoid include:

Focusing Solely on Advertised Pool Size

Many proxy scraper services artificially inflate their claimed pool size by listing the number of IPs they’ve ever used, even inactive ones.

I’ve found it’s best to ignore the numbers touted on website branding and focus instead on success rate and IP freshness.

If your project genuinely requires a large IP pool, ask your chosen provider if it has subjected itself to independent testing to verify its claimed number.

Ignoring Proxy Quality and Ethical Sourcing

I really can’t emphasize this one enough. I’ve found the block rate for free or cheap proxies can be much higher than for premium services (around two-thirds in some cases). If the proxies are unethically sourced, they’re also unlikely to perform well and could even lead to legal implications for your business. Reputable scraper services will have no problem discussing exactly the type and source of their proxies to confirm they’re above board.

Neglecting Geographic Coverage for Global Projects

Naturally, if your project focuses only on one country like the USA, then this isn’t much of a concern.

However, if you’re conducting an international campaign, then insufficient geographic coverage can create data bottlenecks. If your scraping requires region-specific data, make sure that your chosen service offers precise geo-targeting rather than generic IP pools. I’ve learned the hard way that mismatched locations can trigger detection systems, thereby reducing your success rates.

Choosing Based on Price Alone

Most reputable proxy scraper services offer either free trials or money-back guarantees if you’re not satisfied with the service in any way. That means you’re free to ignore all the marketing claims and test actual performance with your specific targets in a risk-free way.
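If you log the outcome of each trial request, a few lines are enough to compare providers on your own targets. This is a minimal sketch: the target names and status codes are made-up sample data, and treating any 2xx response as a success is a simplification, since a block page can also come back with a 200.

```python
from collections import defaultdict


def success_rate_by_target(results):
    """Compute the share of 2xx responses per target.

    results: iterable of (target, http_status) tuples from a trial run.
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for target, status in results:
        totals[target] += 1
        if 200 <= status < 300:
            successes[target] += 1
    return {target: successes[target] / totals[target] for target in totals}


# Hypothetical trial log: (target, HTTP status code)
trial_log = [
    ("shop.example.com", 200),
    ("shop.example.com", 200),
    ("shop.example.com", 403),
    ("shop.example.com", 200),
    ("news.example.com", 200),
    ("news.example.com", 429),
]

rates = success_rate_by_target(trial_log)
```

Running the same log format against each provider’s trial gives you a like-for-like comparison that no marketing page can.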

This ties in closely with my earlier advice about factoring in the true total cost of ownership. Opting for a service that seems cheaper upfront can end up being a false economy if its success rate is significantly lower than that of a premium proxy scraping tool.

Overlooking Scaling and Infrastructure Requirements

In my experience, it’s very easy for the scope of a project to run away with you, particularly as you expand into further countries or domains.

This is why you should always consider your potential growth strategy when selecting a provider.

I’ve found that the most scalable platforms might cost a little more upfront, but will save the expense and hassle of migration to a more accommodating proxy scraping service later down the line.

Favoring Data Center Over Residential Proxies

Websites are using increasingly sophisticated algorithms to detect proxy and bot use. This is why, in my experience, residential proxies have been by far the most popular choice over the past two years. They have a much better chance of mimicking genuine user interactions, so you should favor proxy scraping services that rely mainly on them.

Scrapers in Summary

I’ve found that proxy scraper services aren’t interchangeable utilities to be switched out at a moment’s notice. Instead, they form the backbone of any serious data pipeline.

Of all the different tools I’ve used over the years, I’ve come down in favor of Bright Data. I feel it delivers the optimum blend of scale, automated scraping, and legal clarity that lets my clients and me focus on insights, not infrastructure.

The platform’s success rate (99.95%) speaks for itself, plus you can make good use of the ‘Web Unlocker’ for mission-critical applications. For me, that more than justifies the premium pricing.

For enterprises with substantial AI automation needs, Oxylabs is also a strong contender with its OxyCopilot AI, which offers excellent technical performance.

If your project is on a limited budget, then you or your client will probably favor the free tier or transparent pricing offered by Webshare.

Above all, make sure to match your chosen provider to the specific current and future needs of your project.

Resist the temptation to sign up on the basis of low upfront costs or flashy marketing claims. The only factors that matter for proxy scraper services are proven performance, the quality of the servers, and their ability to scale along with your business.