Last Updated on August 6, 2025 by Ewen Finser

Over the 8+ years I’ve now spent helping clients implement robust data collection strategies, I’ve learned one important lesson: the success of any web scraping project depends not just on the tool itself, but on the proxy server infrastructure. After all, that’s what’s going to keep your data collection operation ticking over smoothly.

Whilst planning projects, I’ve learned that even enterprise-level clients don’t always fully understand the technical complexities of proxy servers for web scraping.

Concealing your IP address just isn’t enough any more. You need proxy servers that deploy IP rotation mechanisms, session management, authentication protocols, and bandwidth optimization.

Choosing the right proxy server can make all the difference between a successful data collection campaign and one that gets blocked within hours of getting started.

Whenever I need to grab structured web data, real-time or historical, I use Bright Data. It makes it easy to pull info from public websites at scale, and it fits right into whatever AI model or workflow I’m working on. Fast, flexible, and super reliable.

What are the Best Proxy Servers for Web Scraping?

In my experience, the right type of proxy server to use for web scraping will depend entirely on your operational needs and target websites.

For example, I’ve helped clients deploy residential proxies for high-stealth operations, given that they offer ‘real’ IP addresses from Internet Service Providers. This makes traffic appear more authentic to target domains like social media platforms, e-commerce sites, and any others with particularly stubborn anti-bot measures.

Datacenter proxies are excellent for high-volume projects where speed is of the essence. They’re cheaper and faster, but also much easier to detect. That means your target websites can’t have any sophisticated anti-bot mechanisms.

If you’re trying to access mobile-specific content whilst bypassing CAPTCHAs, then mobile proxies are a logical choice.

ISP proxies represent a good compromise between datacenter speed and residential proxy authenticity, though I’ve found that their effectiveness can depend very much on your target domain.

Bottom Line Up Front

I’ve tested dozens of proxy server solutions for enterprise-level web scraping projects and have found that Bright Data by far delivers the most reliable technical infrastructure.

Its proprietary ‘Web Unlocker’ scored an 88% success rate against complex anti-bot systems in independent tests.

The platform also offers four main types of proxy: residential, datacenter, ISP, and mobile. Each of these is optimized for different scraping scenarios and is capable of granular geo-targeting (down to the ZIP code) in over 195 countries. They also incorporate Bright Data’s patented CAPTCHA solving technology, which I’ve used on projects with success rates well over 99%.

Admittedly, it’s not the least expensive web scraping proxy server solution but given that data collection accuracy can impact your revenue, I’d say Bright Data pays for itself many times over.

Best Proxy Servers for Web Scraping

Bright Data

In my opinion, Bright Data undoubtedly operates the most comprehensive global proxy server infrastructure.

Its architecture spans over 150 million rotating residential IPs, 770,000 datacenter IPs, 700,000 ISP proxies, and 7 million mobile IPs. This makes it suitable for virtually any enterprise-level scraping operation.

The platform’s ‘Web Unlocker’ deploys AI-powered request management. In plain English, this means it can automatically handle tasks for you like IP rotation, user-agent randomization, and complex CAPTCHA solving.

Bright Data’s implementation of the QUIC protocol delivers average response times of 0.7 seconds, though in practice I’ve found this to be much faster once you get to grips with Web Unlocker. The proxy management system can also automate tasks for you like request retries, header optimization, and geographic IP allocation.

All residential IPs come from users who have provided their explicit consent, so if your business has compliance obligations such as GDPR, you’ll appreciate Bright Data’s transparent methodology, detailed audit logs, and compliance documentation.

Subscribers can currently sign up for rotating residential proxies for $4.20/GB. There’s a free 30-day trial with no obligation, once customers have been verified.

Webshare

This provider boasts over 500,000 datacenter proxies and over 80 million residential proxies in over fifty countries. Its servers support HTTP, HTTPS, and SOCKS5 protocols with automatic protocol detection and seamless switching.

Webshare itself is now a subsidiary of Oxylabs, but still operates independently of its new owner. This means, for instance, it has its own internal policy on ethically-sourced residential proxies whereby operators are made fully aware of how the service operates, and are even offered financial rewards.

Speaking of finances, Webshare is an excellent option for smaller projects given that there’s a free tier from which you can deploy up to ten proxy servers free of charge.

Paid plans are also very modestly priced, starting from $2.99 per month for 100 proxies. Static and rotating residential proxies cost more.

According to independent tests via Fast.com, Webshare proxy servers offer average speeds of 800 to 950 Mbps and have an uptime of 99.97%. Naturally these numbers will vary depending on network conditions and your chosen proxy server, so I encourage readers to run their own tests using the free tier.

Aside from the very reasonable pricing, I was first drawn to Webshare due to its advanced security features. Its proxy servers use measures like TCP fingerprinting, DNS leak protection, and HTTP header inspection to reduce detection.

Oxylabs

While its subsidiary Webshare may be more suited to SMBs, Oxylabs is an enterprise-level solution with over 175 million residential proxies across 195 countries.

Its proxy infrastructure emphasizes high-performance routing, and I’ve seen excellent results when deploying it for large-scale web scraping operations.

One of its most impressive features is the OxyCopilot AI system. With it, you can enter natural language prompts that are then used to automatically generate scraping code, configure proxy settings, and even optimize IP rotation patterns. I’ve found this makes it a lot easier to implement more complex scraping projects.

Oxylabs’ proxy servers support both IPv4 and IPv6. They also support HTTP, HTTPS, SOCKS5, and custom TCP connections.

The platform’s advanced targeting capabilities also include ASN-level precision, coordinate-based targeting, and ZIP code-level geographic selection.

Prices for residential proxies currently start at $4/GB on a pay-as-you-go basis.

NetNut

This platform first came to my attention after I discovered that it has a direct ISP connectivity model.

To put it another way, NetNut partners with over a hundred ISPs to deliver one-hop connectivity. This reduces bottlenecks and can hugely improve scraping performance.

The provider offers over 52 million residential IPs spanning 195 countries. It also has 150,000 datacenter proxies for high-volume data collection. All of these are dedicated, not shared. Of all the proxy services for web scraping I’ve used, these have definitely been among the fastest.

This has been confirmed by independent benchmarks, making NetNut a good choice for projects that involve high-volume web scraping where low latency is crucial.

Besides offering lightning-fast performance, I also really like the anti-detection features built into NetNut’s proxy servers. These include automatic proxy rotation, fingerprint management, and even CAPTCHA solving.

Prices start at $0.45/GB for datacenter proxies and $3.53/GB (billed at $90 per month) for rotating residential proxies. Further discounts are available if you’re willing to pay higher monthly or annual fees for fixed amounts of traffic. For example, you can pay $1.59/GB for a 2TB rotating residential proxy enterprise plan for an annual fee of $3,180.
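To make the per-GB arithmetic above easier to check, here is a minimal sketch. The function names are my own, and I’m assuming 1 TB is counted as 1,000 GB, which is what makes the quoted 2TB figure work out.

```python
# Assumption: providers count 1 TB as 1,000 GB for billing purposes.
GB_PER_TB = 1000


def total_cost(price_per_gb: float, traffic_gb: float) -> float:
    """Total plan cost for a given per-GB price and traffic volume."""
    return round(price_per_gb * traffic_gb, 2)


def effective_price_per_gb(plan_fee: float, traffic_gb: float) -> float:
    """Effective per-GB price of a fixed-fee plan."""
    return round(plan_fee / traffic_gb, 2)


# The 2TB rotating residential plan quoted above.
print(total_cost(1.59, 2 * GB_PER_TB))              # 3180.0
print(effective_price_per_gb(3180, 2 * GB_PER_TB))  # 1.59
```

Running the same two functions against any other provider’s plan gives you a like-for-like number to compare.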

IPRoyal

This provider also offers a huge number of IPs (over 34 million) across 195 countries. It’s extremely flexible, given that its infrastructure includes residential, datacenter, ISP, and mobile proxies.

I’ve used IPRoyal’s dedicated datacenter proxies for projects and feel they deserve special mention, given that they provide 99.99% uptime, 100 Mbps speeds, and unlimited bandwidth. This makes them an ideal choice for major web scraping operations.

The platform supports HTTP, HTTPS, and SOCKS5, as well as automatic protocol detection and seamless switching.

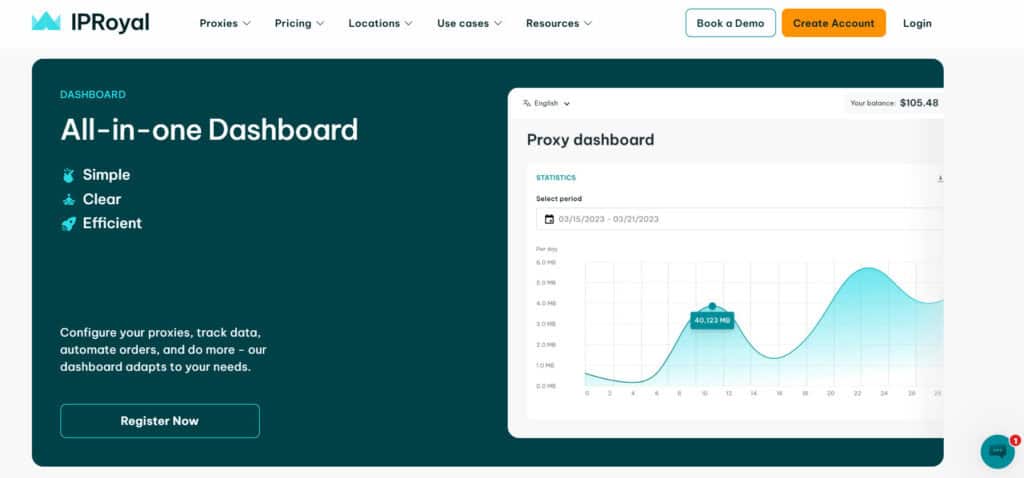

IPRoyal’s API integration is particularly impressive, given it supports 650 software platforms, including popular scraping tools like Scrapy, Splash, and the Python requests library. You can manage these easily from the dashboard, which also offers one of the clearest interfaces I’ve seen for viewing real-time proxy management stats.

In terms of pricing, datacenter proxies start at $1.39/IP for 90 days. Residential proxies can cost as little as $3.68/GB on a pay-as-you-go basis. If you want a free trial though, you’ll need to contact a member of the sales team.

How to Choose the Best Proxy Servers for Web Scraping

When you’re preparing a web scraping project, I’ve found that the best proxy servers to choose are determined by several key factors.

I put the framework below together based on project planning across multiple industries. While not exhaustive, these should definitely be your key criteria when evaluating the best proxy server infrastructure for this purpose:

Evaluate Your Technical Infrastructure Requirements

When planning your project, start by assessing your estimated IP pool size and geographic distribution needs.

Large-scale scraping operations typically require millions of IPs across multiple countries. If your operation is focused on a specific region then you might succeed with only a few hundred thousand IPs in that particular area.

When it comes to technical integration, I learned the hard way that you shouldn’t overlook protocol compatibility. Ensure that your chosen proxies support HTTP, HTTPS, and SOCKS5, ideally with seamless switching.
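As a rough illustration of what protocol compatibility looks like on the client side, the sketch below builds a proxy URL for each of the three schemes. The host `proxy.example.com`, the ports, and the credentials are placeholders I’ve made up, not any provider’s real endpoint.

```python
from urllib.parse import quote

SUPPORTED_SCHEMES = {"http", "https", "socks5"}


def build_proxy_url(scheme, host, port, username=None, password=None):
    """Build a proxy URL, percent-encoding any credentials."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    auth = ""
    if username and password:
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"{scheme}://{auth}{host}:{port}"


def proxies_for(scheme, host, port, username=None, password=None):
    """Return a mapping that routes both HTTP and HTTPS traffic via one proxy."""
    url = build_proxy_url(scheme, host, port, username, password)
    return {"http": url, "https": url}


# Hypothetical endpoint and credentials, for illustration only.
print(proxies_for("socks5", "proxy.example.com", 1080, "user", "p@ss"))
```

The returned dictionary has the shape the Python requests library accepts for its `proxies` argument. Note that requests only handles `socks5://` URLs when its optional SOCKS extra is installed.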

For advanced operations, you may also need UDP support for specialized applications or custom TCP connections for proprietary scraping tools.

Analyze Performance and Reliability Metrics

When it comes to testing web scraping proxy servers, the most important technical metric is the success rate. Enterprise-level providers like Bright Data and Oxylabs can offer success rates of over 99%.
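If you want to measure success rate yourself during a trial, a minimal sketch might look like this. I’m assuming that any 2xx status code counts as a success, which is a simplification: a blocked request can still come back as a 200 with a CAPTCHA page, so treat the result as an upper bound.

```python
def success_rate(status_codes):
    """Fraction of responses with a 2xx status code (0.0 for an empty run)."""
    if not status_codes:
        return 0.0
    successes = sum(1 for code in status_codes if 200 <= code < 300)
    return successes / len(status_codes)


# Made-up status codes from a small test run.
sample_run = [200, 200, 403, 200]
print(f"{success_rate(sample_run):.0%}")  # 75%
```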

I’ve learned to look beyond marketing material on the service website. Instead, focus on third-party performance benchmarks and peer-reviewed test results when examining potential proxy server infrastructure.

In particular, latency and response times can directly impact scraping efficiency. For optimal performance, you’ll ideally need average response times of less than one second. Naturally, you can bring down latency through geographic proximity to target servers.
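To check a provider against that one-second target, you can summarize your own timing samples. The sketch below is one way to do it; the 95th percentile uses the simple nearest-rank method, and the sample values are invented for illustration.

```python
import math
import statistics


def latency_summary(samples_seconds, target_seconds=1.0):
    """Summarize response times: mean, nearest-rank p95, and target check."""
    if not samples_seconds:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_seconds)
    # Nearest-rank percentile: the smallest value covering 95% of samples.
    rank = math.ceil(0.95 * len(ordered))
    mean = statistics.mean(ordered)
    return {
        "mean": mean,
        "p95": ordered[rank - 1],
        "meets_target": mean < target_seconds,
    }


# Made-up response times in seconds.
print(latency_summary([0.4, 0.6, 0.5, 1.5]))
```

I’d look at the p95 as well as the mean, since a handful of slow requests can hide behind a healthy-looking average.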

Some providers also impose bandwidth limitations or place a cap on the number of concurrent connections. Check if this is the case, as these can cause bottlenecks during peak scraping periods.
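If your plan does cap concurrent connections, it’s usually easier to enforce the limit in your own scraper than to hit the provider’s ceiling. Here is a minimal sketch using `asyncio.Semaphore`. The `fetch` argument is a stand-in for whatever coroutine actually performs the request, and the cap of two is an arbitrary example.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrent):
    """Run fetch(url) for every URL, never exceeding max_concurrent at once."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def limited(url):
        # Wait for a free slot before starting the request.
        async with semaphore:
            return await fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(limited(url) for url in urls))


async def demo_fetch(url):
    """Placeholder for a real request; just sleeps briefly."""
    await asyncio.sleep(0.01)
    return f"fetched {url}"


print(asyncio.run(fetch_all(["a", "b", "c"], demo_fetch, max_concurrent=2)))
```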

Examine Session Management and IP Rotation

After trying multiple providers, I’ve learned that the specific mechanisms for rotating IP addresses can vary significantly depending on which provider you choose.

For best success rates, intelligent IP rotation algorithms should measure IP reputation, request timing, and geographic distribution.

Depending on your project, some applications are going to require sticky sessions for multi-step processes. Others might need to deploy more aggressive IP rotation to avoid detection.

The best web scraping proxy servers let you customize session duration. For example, short sessions of one to five minutes work well for high-volume data collection. Longer sessions (say ten to thirty minutes) are better for activities like account-based scraping.
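To show the idea of a configurable session duration, here is a client-side sketch that pins one proxy to a session until it expires, then rotates to the next. In practice most providers handle this on their side through a setting, so treat the `StickyProxyPool` class and its parameters as hypothetical.

```python
import itertools
import time


class StickyProxyPool:
    """Pin a proxy to each session ID for a fixed duration, then rotate."""

    def __init__(self, proxies, session_seconds, clock=time.monotonic):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(proxies)
        self._session_seconds = session_seconds
        self._clock = clock  # injectable so the behaviour can be tested
        self._sessions = {}  # session_id -> (proxy, expires_at)

    def proxy_for(self, session_id):
        now = self._clock()
        entry = self._sessions.get(session_id)
        if entry is not None and now < entry[1]:
            return entry[0]  # session still valid: keep the same proxy
        proxy = next(self._cycle)  # new or expired session: rotate
        self._sessions[session_id] = (proxy, now + self._session_seconds)
        return proxy


# Five-minute sessions over three placeholder proxies.
pool = StickyProxyPool(["proxy-1", "proxy-2", "proxy-3"], session_seconds=300)
print(pool.proxy_for("login-flow"))  # proxy-1
print(pool.proxy_for("login-flow"))  # proxy-1 (same session, same proxy)
```

Setting `session_seconds` to zero gives you a fresh proxy on every call, which is the aggressive rotation end of the spectrum.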

Common Mistakes When Choosing Proxy Servers for Web Scraping

Prioritizing IP pool size over pool quality

Frankly, I’ve found that claims from providers who supposedly have “100 million IPs” or more count for very little.

Even if they haven’t just plucked this number from thin air, it may represent all the IP addresses ever used, including inactive, blacklisted, or low-quality ones.

These types of addresses can actually harm scraping performance. Instead, focus on IP reputation and success rate consistency. These matter far more than any arbitrary numbers.

When it comes to determining IP quality, focus on IP rotation frequency, geographic distribution authenticity, and ISP diversity (if applicable).

Premium providers should make this easier for you by providing a detailed breakdown of IP health, as well as by automatically removing compromised addresses from the pool.
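If your provider doesn’t prune its pool for you, you can approximate the same thing from your own request logs. This is only a sketch: the `ProxyStats` structure and both thresholds are assumptions of mine, and the addresses come from a range reserved for documentation.

```python
from dataclasses import dataclass


@dataclass
class ProxyStats:
    address: str
    successes: int
    failures: int

    @property
    def success_rate(self):
        total = self.successes + self.failures
        return self.successes / total if total else 0.0


def healthy_proxies(stats, min_success_rate=0.9, min_requests=20):
    """Keep proxies that meet the success threshold or lack enough data."""
    kept = []
    for proxy in stats:
        total = proxy.successes + proxy.failures
        # Too few requests to judge fairly, so give it the benefit of the doubt.
        if total < min_requests or proxy.success_rate >= min_success_rate:
            kept.append(proxy.address)
    return kept


# Invented counts for three proxies.
pool_stats = [
    ProxyStats("203.0.113.10", successes=95, failures=5),
    ProxyStats("203.0.113.11", successes=40, failures=60),
    ProxyStats("203.0.113.12", successes=3, failures=2),
]
print(healthy_proxies(pool_stats))  # ['203.0.113.10', '203.0.113.12']
```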

Underestimating latency and geographic impact

This is another crucial mistake to avoid if you want to maximize your project success rates. I’ve tested this myself, and have seen that choosing proxy servers in distant geographic regions can increase latency by 200 – 500 milliseconds, rendering real-time scraping applications virtually unusable.

So long as your provider offers servers in close proximity to your project’s target website, this is an easy issue to fix. However, some web scraping services also deploy proxies with inefficient routing algorithms.

These can add unnecessary network hops, increasing latency. You can avoid this by choosing a service like Bright Data that uses smart routing algorithms to optimize proxy performance. Such proxies can dynamically select the best path based on current network conditions.

Choosing the Best Proxy Servers for Web Scraping

These days, I can safely say that the proxy server landscape for web scraping has moved far beyond simply concealing an individual IP address.

Modern data collection operations require a sophisticated technical infrastructure that prioritizes flexible features, reliability, and compliance.

As you’ve seen throughout this guide, my clients and I have found that the best way to assure this is by carefully matching your project’s technical requirements with the operational capabilities of your chosen web scraping proxy service.

For enterprise-scale operations, I’d say Bright Data represents the gold standard. Its proprietary tools have incredible success rates against even sophisticated anti-bot mechanisms. It combines this with comprehensive IP pool management and a transparent sourcing methodology, which is exactly what you need for larger projects.

Oxylabs also remains a solid option for large organizations that value AI-powered automation and precise geographic targeting.

Small to medium businesses may favor the free tier and lower upfront pricing of Webshare, making it an ideal choice for projects with limited budgets.